Optimal Control Problem

Edited by TobiasWeber


Latest revision as of 17:30, 17 May 2016

This is a template page showing how a general Optimal Control Problem should be described on this website. In this first paragraph, a short description of the problem is given: What is the practical or mathematical context that motivates solving the problem? What do the free and dependent variables of the optimization problem describe? What kind of model (equality constraints) fixes the dependent variables (ODE, RDE, DAE, ...)? What is the objective? One can also cite a paper that introduced or solved the problem, for example:

Max Mustermann, "This paper doesn't exist!", Journal of myths and tails, Eds. Nobody and No-one, -1919, pp. -1-11.

Model Formulation

In this section, the dynamic model that describes the physical or technical process of interest should be given. First, the differential equation should be written down. Then the states, inputs, and parameters should be declared (dimension and type: integer, real, binary, ...). Finally, the parameters that are not free in the optimization, as well as the coefficients, should be stated in a separate table or list. All equations and functions are defined over an interval <math>I = [t_0, t_f]</math>. Initial values and free parameters are taken into account later in the Optimal Control Problem.

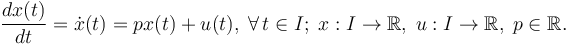

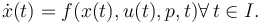
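As a minimal sketch, assuming one also wants the declarations in machine-readable form, the states, inputs, and fixed parameters can be recorded with their dimension and type, and the parameter table can be generated from the same data. The classes `Variable` and `FixedParameter`, the function `parameter_table`, and the symbol `a` are hypothetical illustrations, not part of any existing tool.

```python
from dataclasses import dataclass
from enum import Enum


class VarType(Enum):
    REAL = "real"
    INTEGER = "integer"
    BINARY = "binary"


@dataclass
class Variable:
    name: str
    kind: str        # "state", "input" or "parameter"
    dimension: int
    vtype: VarType


@dataclass
class FixedParameter:
    name: str
    symbol: str
    value: float
    unit: str


def parameter_table(params):
    """Render fixed parameters as a Name | Symbol | Value | Unit table."""
    rows = ["| Name | Symbol | Value | Unit |", "|---|---|---|---|"]
    rows += [f"| {p.name} | {p.symbol} | {p.value:g} | {p.unit} |" for p in params]
    return "\n".join(rows)


# Hypothetical scalar model: one real state x, one real input u, fixed coefficient a
variables = [
    Variable("x", "state", 1, VarType.REAL),
    Variable("u", "input", 1, VarType.REAL),
]
fixed = [FixedParameter("Parameter", "a", 23.0, "[-]")]
print(parameter_table(fixed))
```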

Ordinary Differential Equation (ODE)

For a simple scalar ODE model this would look like the following:

The parameter (coefficient) could be stated in a table if it is fixed:

| Name | Symbol | Value | Unit |
|---|---|---|---|
| Parameter | | 23 | [-] |

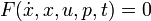

In this case, this results in a right-hand side function <math>f(x,u,t)</math>, because there are no free parameters. Of course, one could also specify an implicit DAE system; then it just has to be stated which states are differential and which are algebraic.

The states and inputs can also be vector-valued functions, which requires matrix-valued coefficients.

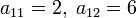

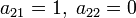

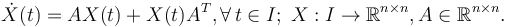

Matrix Differential Equation (MDE)

For a Lyapunov differential equation (with a constant coefficient), one could write:

Here one also has to specify the coefficient matrix (e.g. in a list, which fixes the dimensions of all matrices).

- Coefficients of

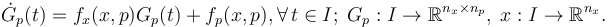
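A matrix differential equation can be integrated like any other ODE once its matrix-valued state is handled entrywise. The sketch below assumes the common form <math>\dot{P} = A P + P A^T + Q</math> with constant coefficient matrices <math>A</math> and <math>Q</math> (the exact equation intended on this page may differ); the 2×2 values are hypothetical. It uses the classical Runge-Kutta method on plain Python lists.

```python
def matmul(X, Y):
    """Product of two dense matrices stored as lists of rows."""
    return [[sum(X[i][l] * Y[l][j] for l in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(X):
    return [list(col) for col in zip(*X)]


def add(*Ms):
    """Entrywise sum of equally sized matrices."""
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*Ms)]


def scale(X, c):
    return [[c * v for v in row] for row in X]


def lyapunov_rhs(P, A, Q):
    """Assumed Lyapunov differential equation: dP/dt = A P + P A^T + Q."""
    return add(matmul(A, P), matmul(P, transpose(A)), Q)


def rk4_step(P, A, Q, h):
    """One classical Runge-Kutta step for the matrix-valued state P."""
    k1 = lyapunov_rhs(P, A, Q)
    k2 = lyapunov_rhs(add(P, scale(k1, h / 2)), A, Q)
    k3 = lyapunov_rhs(add(P, scale(k2, h / 2)), A, Q)
    k4 = lyapunov_rhs(add(P, scale(k3, h)), A, Q)
    return add(P, scale(add(k1, scale(k2, 2.0), scale(k3, 2.0), k4), h / 6))


# Hypothetical constant coefficients; they fix all matrix dimensions (2x2)
A = [[0.0, 1.0], [-2.0, -3.0]]
Q = [[1.0, 0.0], [0.0, 1.0]]
P = [[0.0, 0.0], [0.0, 0.0]]   # initial value P(t_0) = 0

h, n_steps = 0.01, 2000        # integrate over [0, 20]
for _ in range(n_steps):
    P = rk4_step(P, A, Q, h)
print(P)
```

Since this <math>A</math> is stable, <math>P(t)</math> approaches <math>\begin{pmatrix}1 & -1/2\\ -1/2 & 1/2\end{pmatrix}</math>, the solution of the algebraic Lyapunov equation <math>A P + P A^T + Q = 0</math>, which gives a simple check of the implementation.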

Variational Differential Equation (VDE)

For a VDE with respect to the parameters, the equations would be:

In this case, one has to specify the ODE model as done above, as well as the partial derivatives (which fixes all problem dimensions), either in a table or as a list.

The nominal parameter values can be given as above in the ODE case, if they are fixed.
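For an ODE <math>\dot{x} = f(x,p)</math>, the sensitivities <math>G(t) = \partial x(t)/\partial p</math> satisfy the variational differential equation <math>\dot{G} = f_x G + f_p</math> with <math>G(t_0) = \partial x_0/\partial p</math>. The sketch below integrates the nominal ODE and its VDE together for the hypothetical scalar model <math>\dot{x} = -p\,x</math>, whose sensitivity is known in closed form (<math>\partial x/\partial p = -t\,x_0 e^{-pt}</math>), so the result can be checked.

```python
import math


def augmented_rhs(z, p):
    """Nominal ODE and its VDE for the hypothetical model dx/dt = f(x, p) = -p * x.

    z = (x, G) with the sensitivity G = dx/dp.
    """
    x, G = z
    f = -p * x      # nominal right-hand side
    f_x = -p        # partial derivative of f with respect to x
    f_p = -x        # partial derivative of f with respect to p
    return (f, f_x * G + f_p)


def rk4(rhs, z0, p, t_f, n_steps):
    """Classical Runge-Kutta integration of dz/dt = rhs(z, p) on [0, t_f]."""
    h = t_f / n_steps
    z = z0
    for _ in range(n_steps):
        k1 = rhs(z, p)
        k2 = rhs(tuple(zi + h / 2 * ki for zi, ki in zip(z, k1)), p)
        k3 = rhs(tuple(zi + h / 2 * ki for zi, ki in zip(z, k2)), p)
        k4 = rhs(tuple(zi + h * ki for zi, ki in zip(z, k3)), p)
        z = tuple(zi + h / 6 * (a + 2 * b + 2 * c + d)
                  for zi, a, b, c, d in zip(z, k1, k2, k3, k4))
    return z


x0, p, t_f = 2.0, 0.5, 1.0
# G(t_0) = 0 because the initial value x0 does not depend on p
x_end, G_end = rk4(augmented_rhs, (x0, 0.0), p, t_f, 100)

x_exact = x0 * math.exp(-p * t_f)
G_exact = -t_f * x0 * math.exp(-p * t_f)
print(abs(x_end - x_exact), abs(G_end - G_exact))
```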

Optimal Control Problem

In this section, the Optimal Control Problem (OCP), with the dynamic model as an equality constraint, is specified. In general, every OCP has a Lagrange-type objective, a Mayer-type objective, or both. First, the general structure of the OCP is given to show the type of objective (linear, quadratic, ...) and constraints (equality and/or inequality, linear or nonlinear), as well as the variables that are free in the optimization. All of the above dynamic models can be used; in the following we simply write for all of them: <math>\dot{x} = f(x,u,p,t)</math>.

Implicit systems would be treated similarly. The general OCP is then written as:

<math>
\begin{array}{cl}
\displaystyle \min_{x, u, p} & J(x,u,t)\\[1.5ex]
\mbox{s.t.} & \dot{x} = f(x,u,p,t), \forall \, t \in I\\
& 0 = g(x(t_0),x(t_f),p) \\
& 0 \ge c(x,u,p), \forall \, t \in I\\
& 0 = h(x,u,p), \forall \, t \in I \\
& x \in \mathcal{X},\,u \in \mathcal{U},\, p \in P.
\end{array}
</math>

This is the most general formulation, in which the initial value, the parameters, and the inputs are free. The states and inputs are vectors (or matrices) of functions, while the parameters are just a vector of variables. If the initial (or final) state is fixed, one simply adds a constraint <math>x(t_0) = x_0</math> and specifies the value(s) of <math>x_0</math> in a list or table.

If the parameters are fixed, one simply removes them from the list of optimization variables and states their values in a list (the same holds for point conditions inside the interval <math>I</math>). If the OCP is a parameter estimation problem without inputs, the inputs do not appear in the problem above at all, neither as optimization variables nor as part of the constraints. In contrast, if the inputs are merely fixed, this is expressed by the equality constraints. In this setup, the problem above is very general, and by stating the different objective and constraint functions one can cover a wide range of OCPs.
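To illustrate how the components <math>f, g, c, h</math> of the general OCP carry over to a finite-dimensional problem, the following sketch evaluates the largest constraint violation of a candidate solution on an equidistant grid. It assumes piecewise constant inputs and an explicit Euler discretization of the dynamics, one simple direct transcription; more accurate schemes such as collocation or multiple shooting are common. The container class `OCP`, the function `max_violation`, and the toy problem are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]


@dataclass
class OCP:
    """Hypothetical container for the general OCP: dynamics f, boundary
    conditions g = 0, path inequalities c <= 0, path equalities h = 0."""
    f: Callable[[Vector, Vector, Vector, float], Vector]
    g: Callable[[Vector, Vector, Vector], Vector]
    c: Callable[[Vector, Vector, Vector], Vector]
    h: Callable[[Vector, Vector, Vector], Vector]
    t0: float
    tf: float


def max_violation(ocp, xs, us, p):
    """Largest constraint violation of a candidate solution on an equidistant grid.

    xs[k] approximates x(t_k) for k = 0..N, us[k] is the constant input on
    [t_k, t_{k+1}), and the dynamics are discretized with explicit Euler steps.
    """
    n_int = len(us)
    dt = (ocp.tf - ocp.t0) / n_int
    residuals = []
    for k in range(n_int):
        t_k = ocp.t0 + k * dt
        f_k = ocp.f(xs[k], us[k], p, t_k)
        # dynamics: x_{k+1} - x_k - dt * f(x_k, u_k, p, t_k) = 0
        residuals += [abs(xn - xk - dt * fi) for xn, xk, fi in zip(xs[k + 1], xs[k], f_k)]
        # path constraints c <= 0 and h = 0, checked at the grid points
        residuals += [max(ci, 0.0) for ci in ocp.c(xs[k], us[k], p)]
        residuals += [abs(hi) for hi in ocp.h(xs[k], us[k], p)]
    # boundary conditions 0 = g(x(t_0), x(t_f), p)
    residuals += [abs(gi) for gi in ocp.g(xs[0], xs[-1], p)]
    return max(residuals, default=0.0)


# Hypothetical toy problem: dx/dt = p * u, x(t_0) = 1, u >= -2 on [0, 1]
ocp = OCP(
    f=lambda x, u, p, t: [p[0] * u[0]],
    g=lambda x0, xf, p: [x0[0] - 1.0],
    c=lambda x, u, p: [-u[0] - 2.0],
    h=lambda x, u, p: [],
    t0=0.0,
    tf=1.0,
)
N = 10
us = [[-1.0] for _ in range(N)]
xs = [[1.0 - k / N] for k in range(N + 1)]
print(max_violation(ocp, xs, us, [1.0]))
```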

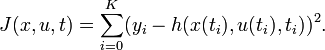

Objective

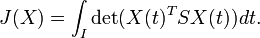

To specify the objective, the Mayer and/or Lagrange term has to be described. For a parameter estimation problem, the objective would be a finite sum of the squared differences between the measurements and the model response:
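A minimal sketch of such a least-squares objective, assuming the model response has already been evaluated at the measurement times. The functions `least_squares_objective` and `model_response` (an output equal to the state of <math>\dot{x} = -p\,x</math>) are hypothetical, and the "measurements" are synthetic and noise-free.

```python
import math


def least_squares_objective(measurements, model_outputs):
    """Finite sum of squared differences between the measurements eta_i and
    the model response y(t_i) at the measurement times t_i."""
    if len(measurements) != len(model_outputs):
        raise ValueError("expected one model output per measurement")
    return sum((eta - y) ** 2 for eta, y in zip(measurements, model_outputs))


def model_response(p, x0, times):
    """Hypothetical output function y(t) = x(t) of the model dx/dt = -p x, x(0) = x0."""
    return [x0 * math.exp(-p * t) for t in times]


times = [0.0, 1.0, 2.0, 3.0]
etas = model_response(0.5, 2.0, times)      # synthetic, noise-free "measurements"
print(least_squares_objective(etas, model_response(0.5, 2.0, times)))  # 0.0
print(least_squares_objective(etas, model_response(0.7, 2.0, times)))  # positive
```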

The output function has to be specified as above in the model section. An objective with a matrix-valued state could look like this:

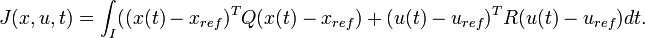

Here the weighting matrix <math>S</math> has to be given. For a tracking-type objective with vector-valued states and inputs, a quadratic objective would be:

Surely demanding the specification of  and

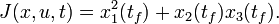

and  in a list or table. Also one can specify a Mayer term:

in a list or table. Also one can specify a Mayer term:

All of this is quite general and has to be adapted to the needs of the specific OCP of interest.
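As an illustration of a Lagrange term combined with a Mayer term, the sketch below evaluates an assumed quadratic tracking objective <math>\int_{t_0}^{t_f} (x-x_{\mathrm{ref}})^T Q (x-x_{\mathrm{ref}}) + (u-u_{\mathrm{ref}})^T R (u-u_{\mathrm{ref}})\,dt + (x(t_f)-x_f)^T P_f (x(t_f)-x_f)</math> on sampled trajectories, using the trapezoidal rule for the integral. The weights, reference functions, and the function `tracking_objective` are hypothetical.

```python
def quad_form(v, W):
    """v^T W v for a vector v and a square matrix W (list of rows)."""
    n = len(v)
    return sum(v[i] * W[i][j] * v[j] for i in range(n) for j in range(n))


def tracking_objective(ts, xs, us, x_ref, u_ref, Q, R, x_f_ref, P_f):
    """Assumed quadratic tracking objective on a time grid ts.

    Lagrange term: trapezoidal rule for the integral of
        (x - x_ref)^T Q (x - x_ref) + (u - u_ref)^T R (u - u_ref),
    with xs[k], us[k] sampled at ts[k].
    Mayer term: (x(t_f) - x_f_ref)^T P_f (x(t_f) - x_f_ref).
    """
    def integrand(k):
        dx = [a - b for a, b in zip(xs[k], x_ref(ts[k]))]
        du = [a - b for a, b in zip(us[k], u_ref(ts[k]))]
        return quad_form(dx, Q) + quad_form(du, R)

    lagrange = sum(0.5 * (ts[k + 1] - ts[k]) * (integrand(k) + integrand(k + 1))
                   for k in range(len(ts) - 1))
    dx_f = [a - b for a, b in zip(xs[-1], x_f_ref)]
    return lagrange + quad_form(dx_f, P_f)


# Hypothetical scalar example: x(t) = t, u(t) = 0 on [0, 1], zero references
N = 1000
ts = [k / N for k in range(N + 1)]
J = tracking_objective(ts, [[t] for t in ts], [[0.0] for _ in ts],
                       x_ref=lambda t: [0.0], u_ref=lambda t: [0.0],
                       Q=[[1.0]], R=[[1.0]], x_f_ref=[0.0], P_f=[[1.0]])
print(J)  # close to 1/3 + 1 = 4/3
```

For this example the exact value is 4/3; with 1000 intervals the trapezoidal rule overestimates the integral of <math>t^2</math> by about <math>1.7 \cdot 10^{-7}</math>.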

Constraints

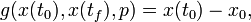

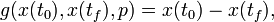

The dynamic constraints are given in the model section; however, the initial (or final) state constraint <math>g</math> still has to be specified. It can have a simple form:

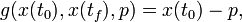

where one has to state <math>x_0</math>. More complicated expressions like periodic constraints:

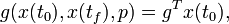

free initial states (as parameters):

and restricting hyperplanes:

are also possible. General expressions could also be used. Constraints acting on the whole interval <math>I</math> can also be very general or just box constraints.
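The boundary constraint <math>0 = g(x(t_0), x(t_f), p)</math> can be coded as a residual function that vanishes exactly when the constraint holds. The sketch below shows the variants mentioned above for vector-valued states, all with the common signature <code>(x_t0, x_tf, p, ...)</code>. The hyperplane variant assumes the final state is restricted to <math>a^T x(t_f) = b</math>, the free-initial-state variant assumes the first entries of <math>p</math> hold the initial state, and all function names are hypothetical.

```python
def g_fixed_initial(x_t0, x_tf, p, x0):
    """Fixed initial value: x(t_0) - x_0 = 0, with x_0 stated in a list or table."""
    return [a - b for a, b in zip(x_t0, x0)]


def g_periodic(x_t0, x_tf, p):
    """Periodicity: x(t_0) - x(t_f) = 0."""
    return [a - b for a, b in zip(x_t0, x_tf)]


def g_free_initial(x_t0, x_tf, p):
    """Free initial states as parameters: x(t_0) - p[:n_x] = 0
    (assumes the first n_x entries of p hold the initial state)."""
    return [a - b for a, b in zip(x_t0, p[:len(x_t0)])]


def g_hyperplane(x_t0, x_tf, p, a, b):
    """Final state on an (assumed) hyperplane a^T x(t_f) = b."""
    return [sum(ai * xi for ai, xi in zip(a, x_tf)) - b]


# Hypothetical example data
x_t0, x_tf, p = [1.0, 2.0], [1.0, 2.0], [1.0, 2.0, 0.3]
print(g_fixed_initial(x_t0, x_tf, p, x0=[1.0, 2.0]))    # [0.0, 0.0]
print(g_periodic(x_t0, x_tf, p))                          # [0.0, 0.0]
print(g_free_initial(x_t0, x_tf, p))                      # [0.0, 0.0]
print(g_hyperplane(x_t0, x_tf, p, a=[1.0, 1.0], b=3.0))   # [0.0]
```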

The sets for the inputs and states should be used to define the function spaces these functions live in, as well as other set constraints that cannot be expressed by nonlinear functions. One could, for example, require the inputs to be piecewise constant or continuous piecewise linear functions and state the corresponding function space for the states. The set constraint on the parameters should be used to define all constraints on the parameters if they are free in the optimization; otherwise it does not appear. This could look like:

Reference Solution

In this section, one should provide reference solutions. First, the discretization, the initial guess, the solution algorithm, and the hardware used should be stated, as well as the solution time. Then the optimal solution should be given, at least as PNG plots of the optimal trajectories, together with the optimal objective value.
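For illustration, a minimal reference-solution report could look like the following sketch. It assumes the hypothetical linear-quadratic toy problem <math>\min \int_0^T x^2 + u^2\,dt</math> s.t. <math>\dot{x} = u</math>, <math>x(0) = x_0</math>, discretizes it with piecewise constant inputs, explicit Euler, and a rectangle-rule cost, solves the discrete problem exactly by a backward Riccati recursion, and reports the objective value and solution time. For this problem the continuous optimal value <math>\tanh(T)\,x_0^2</math> is known, which gives a check on the discretization.

```python
import math
import time


def solve_reference(T, n_intervals, x0):
    """Discretized toy problem: min sum_k h*(x_k^2 + u_k^2)
    s.t. x_{k+1} = x_k + h*u_k, x_0 given (piecewise constant u, explicit Euler).
    Solved exactly by dynamic programming (backward Riccati recursion)."""
    h = T / n_intervals
    P = 0.0                                # cost-to-go weight at t_f (no Mayer term)
    gains = []
    for _ in range(n_intervals):
        gains.append(P / (1.0 + h * P))    # feedback gain from P_{k+1}
        P = h + P / (1.0 + h * P)          # P_k = h + P_{k+1} / (1 + h P_{k+1})
    gains.reverse()                        # gains[k] now belongs to interval k

    xs, us = [x0], []
    for K in gains:                        # forward simulation of the optimal trajectory
        us.append(-K * xs[-1])
        xs.append(xs[-1] + h * us[-1])
    return xs, us, P * x0 ** 2             # optimal objective value P_0 x_0^2


T, n_intervals, x0 = 1.0, 1000, 1.0
start = time.perf_counter()
xs, us, objective = solve_reference(T, n_intervals, x0)
elapsed = time.perf_counter() - start

print(f"discretization: {n_intervals} intervals, explicit Euler, piecewise constant u")
print(f"optimal objective: {objective:.6f} (continuous value: {math.tanh(T) * x0 ** 2:.6f})")
print(f"solution time: {elapsed:.4f} s")
```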

Source Code

In this section, one can provide the source code of the implementation that solved the problem and produced the reference solution. This should be done by providing the source files.

Nonlinear Model Predictive Control

If the OCP is solved repeatedly in a Nonlinear Model Predictive Control (NMPC) scheme, the configuration of this outer loop can be specified here (sampling rate, observer, measurement error, measurement function, ...). Reference solutions and code for the closed loop can also be given to clarify the context the OCP comes from. Robustness and other control-specific matters, such as real-time requirements or model-plant mismatch, should also be described.
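The outer loop can be sketched as a receding-horizon iteration: at each sampling instant the current state is measured (assumed exact here), the OCP is solved on a finite horizon, and only the first control is applied to the plant. To keep the sketch short and self-contained, the inner OCP is a hypothetical linear-quadratic one (<math>x_{k+1} = x_k + h\,u_k</math>) with a closed-form solution, and the simulated plant differs from the model by an assumed actuator gain of 0.8 to mimic model-plant mismatch. A real NMPC scheme would call a nonlinear OCP solver at this point instead.

```python
def horizon_gain(h, n_horizon):
    """Gain K of the first control u_0 = -K x_0 for the horizon OCP
    min sum_k h*(x_k^2 + u_k^2)  s.t.  x_{k+1} = x_k + h*u_k  (no terminal cost),
    via the backward Riccati recursion P_k = h + P_{k+1} / (1 + h P_{k+1})."""
    P = 0.0                          # P_N = 0
    for _ in range(n_horizon - 1):   # after the loop P holds P_1
        P = h + P / (1.0 + h * P)
    return P / (1.0 + h * P)


def solve_ocp(x_measured, h, n_horizon):
    """Solve the horizon OCP from the measured state and return only u_0."""
    return -horizon_gain(h, n_horizon) * x_measured


def plant_step(x, u, h, actuator_gain=0.8):
    """Simulated process with model-plant mismatch (hypothetical actuator gain)."""
    return x + h * actuator_gain * u


h, n_horizon = 0.1, 20               # sampling time and horizon length (hypothetical)
x = 1.0
trajectory = [x]
for _ in range(300):                 # one closed-loop iteration per sampling instant
    u = solve_ocp(x, h, n_horizon)   # measurement assumed exact: x is passed directly
    x = plant_step(x, u, h)          # apply the first control only
    trajectory.append(x)
print(trajectory[-1])
```

Because this toy model is time-invariant, the gain is the same at every sample; it is recomputed inside the loop only to mirror the structure of repeatedly solving the OCP.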